Write Us

We are just a call away

[ LET’S TALK AI ]

X

Discover AI-

Powered Solutions

Get ready to explore cutting-edge AI technologies that can transform your workflow!

There is no doubt that AI is super fast. Yet, this speed also brings bigger risks. These risks, like flawed outputs, hidden biases, or unsafe behaviors in AI models, can impact businesses and users.

To fix this issue, Anthropic has introduced autonomous AI agents built for safety oversight in models like Claude.

“Anthropic’s new autonomous AI agents audit models like Claude to detect hidden risks, unsafe behaviors, and flawed outputs. They’re setting a new benchmark for AI safety. They work like tireless safety inspectors who use automated AI auditing to find issues before they cause harm. It’s like using AI to audit AI.”

This approach signals a major change in AI governance, where AI itself becomes the watchdog for AI. In this blog, we’ll explore how these AI safety agents operate, what sets them apart from traditional AI auditioning tools, and how businesses can leverage them.

AI safety agents are smart, autonomous systems that check if other AI models work safely. They are designed to spot harmful outputs, hidden patterns, and risky behaviors before those issues reach users.

Unlike traditional tools, these agents can run automated AI auditing continuously, without human intervention. They help companies meet compliance rules, protect brand trust, and keep AI systems aligned with ethical goals.

Traditional AI auditing tools work with set rules and manual reviews. They often run checks at fixed times and need human oversight. This makes them slower and less adaptable to new risks.

AI safety agents are different. They are autonomous AI agents that run 24/7. They learn, adapt, and spot issues in real time. They can test, evaluate, and challenge AI models on their own. With automated AI auditing, they find hidden risks faster than static tools.

For enterprises, this means better protection, quicker fixes, and stronger AI safety solutions without depending on constant human input.

👉Suggested Read: Power of Multi-Agent AI Systems

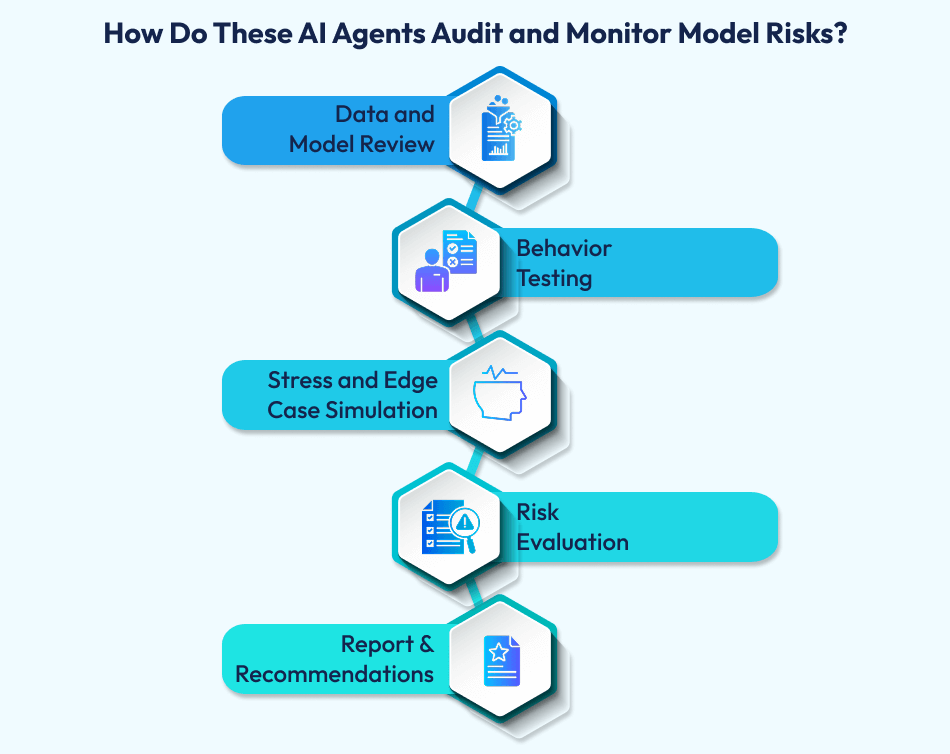

The AI model auditing process checks if an AI system is safe, fair, and reliable. With AI agents to audit AI models, this process is faster and more accurate.

The autonomous AI agents start by scanning the model’s training data. They look for bias, toxic content, or sensitive information.

For example, in a finance chatbot, they might detect patterns that give unfair loan suggestions.

AI safety agents then interact with the AI model. They ask different types of questions and record responses. If the model gives harmful or false outputs, the agents flag them instantly.

For example, in a healthcare app, they can spot unsafe medical advice.

Here, red-teaming agents push the model to its limits. They try to trick it into revealing private data or producing dangerous content.

This is key in automated AI auditing, as real attackers often use these tactics.

Evaluation agents score the model on accuracy, compliance, and ethical standards. In enterprise AI safety solutions, this score helps decide if the model is ready for public use.

The agents create a detailed report. It lists risks, examples, and fixes.

For example, if a generative AI development company tests a marketing AI, they might recommend new content filters.

With this process, autonomous AI agents for safety make sure AI models meet business, legal, and ethical needs. They turn AI auditing from a one-time task into a continuous, proactive system.

If you want to protect your AI models, then connect with Techugo, one of the trusted top AI app development companies for enterprise-grade AI safety solutions. Get in touch with us today.

| Agent Type | Main Function | Use Case Example |

| Investigator Agent | Deep behavior analysis | Finds hidden goals or unsafe patterns in an aligned legal AI model |

| Evaluation Agent | Structured testing for truthfulness and bias | Flags biased hiring suggestions in an HR recruitment AI |

| Red-Teaming Agent | Adversarial stress and attack simulation | Attempts to make a banking chatbot reveal confidential account details |

| Super-Agent Layer | Combines signals from all agents for full audit | Delivers a complete safety report for enterprise AI safety solutions |

| Safety Layer | Description | Purpose |

| Classifier Filtering | Screens and blocks unsafe prompts or outputs | Lowers the chance of harmful or non-compliant content being shared |

| Autonomous Audit Agents | AI agents to audit AI models with human-like checks | Spots misalignment, unsafe actions, and risky responses |

| Offline Evaluation | Scheduled reviews with human oversight | Confirms long-term accuracy and safe behavior |

| Threat Intelligence Feed | Tracks external threats, abuse patterns, and risk trends | Strengthens defenses before issues occur |

| Bug Bounty Program | Rewards for finding system weaknesses | Engages the community to improve enterprise AI safety solutions |

Yes. AI safety agents are not limited to Anthropic’s own models.

They can work with many AI systems across different industries. The key is the AI integration model, which allows these agents to connect with other platforms.

The same applies in healthcare.

“In healthcare, AI agents for auditing AI models could scan a hospital chatbot for unsafe medical suggestions. In finance, they could block risky investment tips or flag fraud-prone responses.”

— Ankit Singh, COO, Techugo

Because they run automated AI auditing, these agents can adapt to new risks quickly. They don’t just check for errors; they monitor for misuse, bias, and alignment issues in real time. This makes them valuable in regulated industries like banking, insurance, or pharmaceuticals.

For businesses, this flexibility means they don’t have to rebuild safety systems from scratch. They can hire artificial intelligence developers from Techugo to integrate these agents into their current workflows.

Even the best mobile app development companies can deploy them inside customer-facing apps for added security.

In short, these autonomous AI agents for safety are not just for Anthropic’s ecosystem. They can protect any AI system where trust, compliance, and safety are critical.

Autonomous AI agents are changing how businesses secure their AI systems and overall operations. They work tirelessly, running automated AI auditing without any downtime. Here’s a detailed look at why they matter for protecting business operations.

Human teams cannot monitor AI outputs every second. But AI agents can audit every second. They run continuous checks, scanning for unsafe language, security vulnerabilities, or malicious use.

This constant vigilance helps businesses avoid PR crises, legal trouble, and customer distrust.

Bias in AI can lead to discrimination, unfair treatment, and reputational damage. AI safety agents can test models against compliance frameworks like GDPR or HIPAA.

This is a core part of enterprise AI safety solutions, ensuring both ethical and legal compliance.

Manual reviews are prone to mistakes. Auditors may overlook subtle patterns or complex correlations.

Autonomous AI agents for safety can run millions of tests at speeds no human can match. They spot risks in early stages, before they become major problems. This reduces operational downtime and financial loss.

In high-risk industries, delays can be costly. These agents can immediately block unsafe actions.

This instant action is a big upgrade from traditional AI model auditing, which often happens after the fact.

Every industry faces unique AI risks.

Techugo, a trusted AI agent development company, can customize safety agents to fit each sector’s needs. Their AI experts can even integrate them into mobile or web apps.

To hire out artificial intelligence engineers, contact us now.

Once integrated, these agents run with minimal human intervention. They cut down the need for large auditing teams while delivering more accurate results.

Over time, this reduces operational costs without compromising safety. Even small businesses can hire artificial intelligence developers to add these tools at a fraction of the cost of full-time staff.

In short, autonomous AI agents are a safety measure as well as a strategic asset for the business. They protect data, maintain compliance, prevent brand damage, and save costs.

For businesses aiming to build trust and scale AI adoption, partnering with Techugo, a top generative AI development company, ensures these agents are integrated effectively into every critical AI workflow.

👉Suggested Read: Healthcare Chatbot Like Google’s AMIE

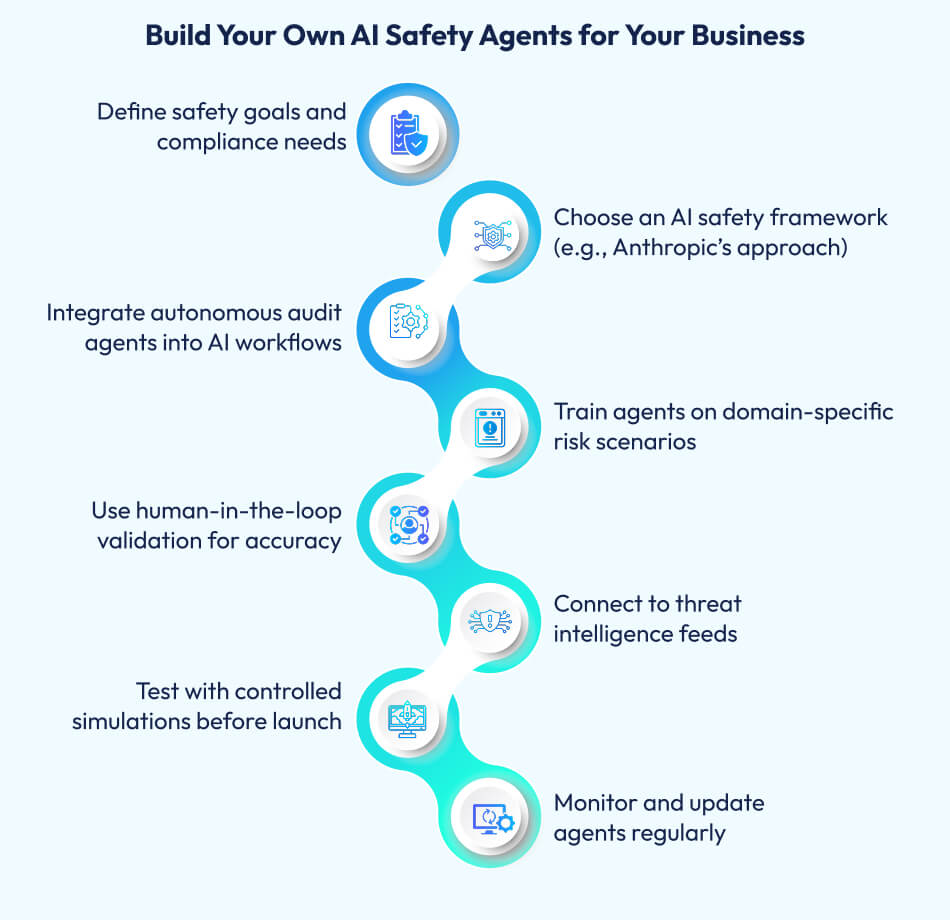

Building or deploying AI safety agents requires a clear plan, skilled developers, and the right integration strategy. Here’s a detailed guide on how businesses can get started with autonomous AI agents for safety.

Before development starts, businesses must decide what they want these agents to do. Is the goal AI model auditing for bias detection, regulatory compliance, or harmful content blocking?

For example, a healthcare provider may prioritize HIPAA compliance, while a bank may focus on fraud prevention. Clear goals make the system effective from day one.

Working with an AI agent development company is essential. They bring expertise in building autonomous AI agents that fit different industries.

Top AI app development companies like Techugo can help integrate these agents into apps, web platforms, or enterprise systems.

Safety agents need a smooth connection with existing AI tools. The AI integration model decides whether they operate inside the AI platform (embedded) or as a separate auditing layer (external).

For example, an embedded model could monitor every AI query instantly, while an external model could run batch checks at intervals.

The safety agents must be able to:

These capabilities can be trained using domain-specific datasets to make the agents industry-ready.

Even autonomous AI agents benefit from human oversight. A hybrid approach ensures high accuracy. For example, in automotive AI, agents could flag sensor errors, while engineers verify and fine-tune solutions before deployment.

Before live deployment, the agents should run in sandbox mode. This helps detect false positives, missed risks, and integration bugs. For example, a generative AI in automotive industry application could be tested on simulated road scenarios before real-world rollout.

Once deployed, these agents should have access to a threat intelligence feed and regular updates. The best mobile app development companies can integrate them into mobile dashboards for real-time monitoring and reporting. This ensures they keep pace with evolving AI risks.

By following this process, businesses can deploy enterprise AI safety solutions tailored to their needs.

If you want to build agents from scratch or adapt existing frameworks like Anthropic’s, hire our artificial intelligence developers. Either way, the goal remains the same – safe, compliant, and reliable AI operations at every level of the business.

Anthropic’s autonomous AI agents work like tireless digital watchdogs for AI. Anthropic’s AI safety agents don’t just scan for obvious mistakes. But they also dig deeper and find hidden biases, unsafe intentions, or subtle alignment issues in models like Claude. They’re built to protect both people and the integrity of your AI systems.

They use a layered approach to automated AI auditing. They test models with real-world prompts, probing for vulnerabilities, and stress-testing under high-risk scenarios. It’s like putting your AI through a crash test before letting it on the road.

Yes. While fine-tuned for Claude, they’re adaptable. Businesses can apply Anthropic’s enterprise AI safety solutions to other AI platforms. This means that you can protect any generative AI system, even if it wasn’t built by Anthropic.

You don’t have to reinvent the wheel. Partner with an AI agent development company or hire artificial intelligence developers to customize Anthropic’s approach for your needs. Whether you want an embedded safety layer or an independent AI safety agent, the blueprint exists, and you just need to adapt it.

Not at all. Beyond keeping models in check, they can strengthen generative AI and data governance, catch fraud in real time, and safeguard industries like automotive, where generative AI in the automotive industry could be the difference between safe and unsafe road decisions.

In the race to innovate, safety often feels like the slow lane. Anthropic’s autonomous AI agents prove it doesn’t have to be that way. These AI agents to audit AI models work quietly in the background, protecting people, data, and decisions. They make sure AI performs responsibly.

Whether you run a fintech startup, a global enterprise, or a company exploring generative AI in the automotive industry, safety is non-negotiable. Anthropic’s model shows that AI can monitor AI, creating systems that are both powerful and principled.

The future belongs to businesses that blend innovation with integrity. With the right AI safety agents in place, you’re avoiding risks as well as building trust that lasts.

Write Us

sales@techugo.comOr fill this form