
AI models in 2026 are bigger, faster, and far more demanding than before. They run across GPUs, clouds, and clusters that are constantly changing.

Training jobs grow rapidly. Inference loads jump without warning. If the system isn’t prepared, everything slows down while your cloud budget drains. This pressure feels familiar: it’s the same kind of complexity teams faced when microservices exploded years ago.

Back then, Kubernetes stepped in with a clear answer. It helped teams organize workloads, recover from failures, and scale without chaos.

Now, the same qualities make Kubernetes a natural fit for AI deployment. Teams struggling with scalable AI model deployment are turning to its orchestration, automation, and elasticity to stay in control.

As AI model deployment in 2026 becomes more complex, Kubernetes brings the stability that large-scale systems need.

Let’s look at why and how Kubernetes is powering scalable AI model deployment in 2026, the challenges involved, and the best ways to deploy AI models on Kubernetes.

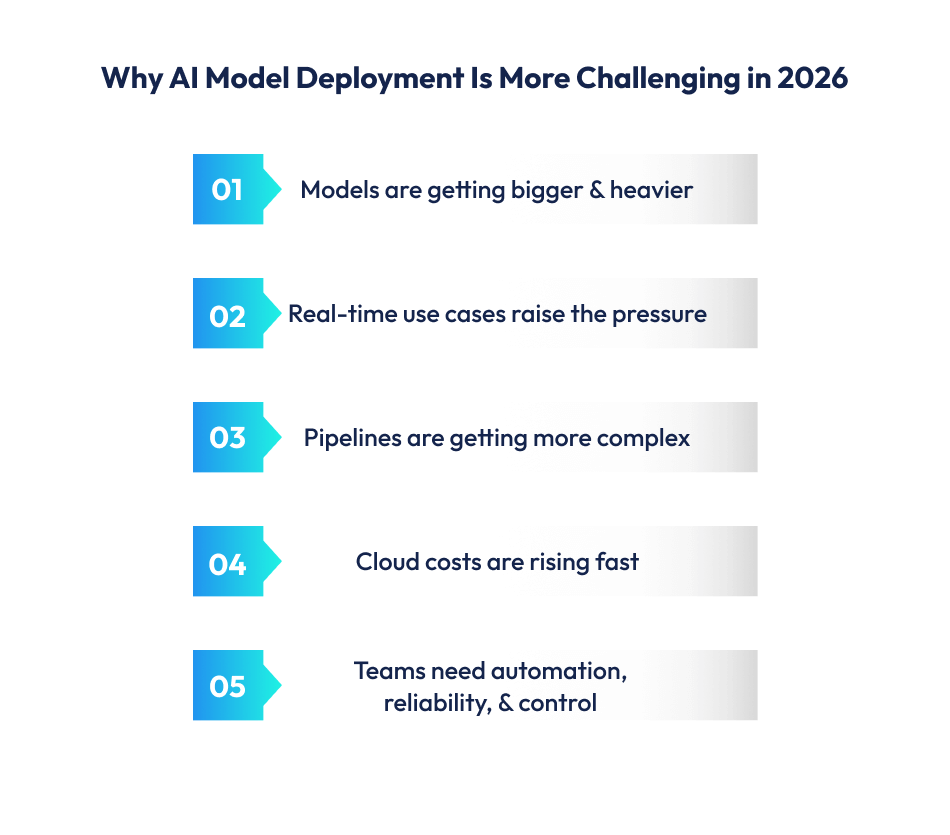

AI models in 2026 are much harder to deploy than before. They use more GPUs, need faster inference, and rely on distributed setups.

Some common reasons:

All this makes AI model deployment in 2026 more demanding.

Industries now expect instant predictions. Even a small delay creates problems.

Real examples include:

These workloads make scalable AI model deployment essential, not optional.

Model training, testing, and serving must stay aligned. A tiny mismatch can break an entire pipeline.

Common issues teams face:

Without the right foundation, Kubernetes AI workloads become unpredictable and expensive.

Modern AI workloads burn compute quickly. If the system can’t scale efficiently, the cloud bill jumps.

A real case from the industry – “A global enterprise saw its inference bill double during traffic spikes because the system lacked proper workload orchestration.”

This is becoming a common pattern across companies using large models.

With larger models, faster cycles, and distributed environments, manual management isn’t possible anymore. Teams now need platforms that:

That’s what pushes companies toward Kubernetes for AI deployment as a stable, scalable solution.

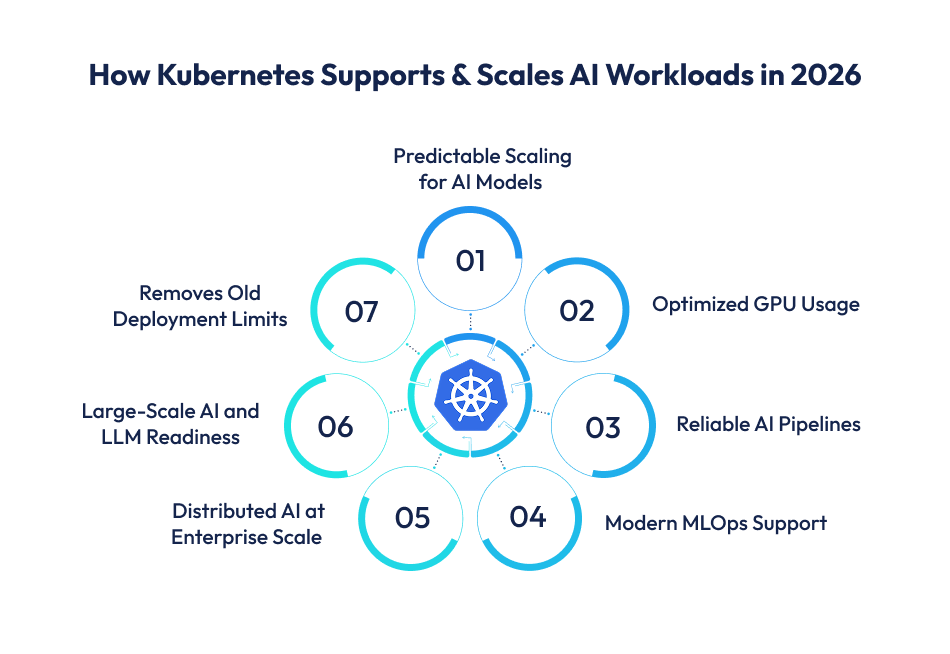

Kubernetes handles the chaos of modern AI systems.

AI systems in 2026 don’t run as a single process anymore. They move across clusters, GPUs, clouds, and edge nodes. This makes orchestration the biggest challenge.

Kubernetes solves that by giving teams one unified system to run everything.

It manages model containers, allocates GPU resources, balances traffic, and keeps workloads running under pressure.

A 2025 cloud-native report noted that over 70% of enterprises running large AI systems rely on Kubernetes for orchestration (CNCF Annual Survey 2025).

That number is growing even faster in 2026 as models become heavier.

This is why you’ll see Kubernetes being adopted widely for scalable AI model deployment in sectors like fintech, mobility, SaaS, and healthcare.

Scaling AI is harder than scaling normal microservices.

ML workloads spike suddenly.

Inference traffic isn’t steady.

And GPU resources are limited.

Kubernetes brings several advantages here:

“An APAC fintech platform cut inference latency by 38% after shifting fraud detection models to Kubernetes autoscaling. They no longer had to manually boost compute during payday spikes.”

This is the power of Kubernetes for AI deployment when done right.

GPUs are expensive.

Wasting even one GPU hour adds up.

Kubernetes offers intelligent GPU scheduling:

This prevents overprovisioning, a common problem in AI teams.

A 2026 industry review showed that companies using Kubernetes GPU scheduling cut GPU waste by up to 25–40% (Tech Monitor Cloud Efficiency Report 2026).

That’s a huge saving for teams running LLMs or vision models.
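To make the scheduling idea concrete, here is a deliberately simplified sketch of GPU placement. It follows two steps. First, it filters out nodes that lack enough free GPUs. Second, it prefers the node that ends up most fully used, a bin-packing approach that keeps whole nodes free for large jobs instead of scattering idle GPUs. This is not the kube-scheduler's code, which runs many more filter and score plugins. Its `NodeResourcesFit` plugin does offer a `MostAllocated` scoring strategy with a similar goal. The node names and GPU counts are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Node:
    """A hypothetical GPU node with a fixed number of GPUs."""
    name: str
    total_gpus: int
    used_gpus: int = 0

    @property
    def free_gpus(self) -> int:
        return self.total_gpus - self.used_gpus


def place(nodes: list[Node], gpus_requested: int) -> str | None:
    """Place one request; return the chosen node name, or None if it must wait (Pending)."""
    # Filter: only nodes with enough free GPUs can run the workload at all.
    feasible = [n for n in nodes if n.free_gpus >= gpus_requested]
    if not feasible:
        return None
    # Score: pick the node with the fewest GPUs left over after placement (bin-packing).
    best = min(feasible, key=lambda n: n.free_gpus - gpus_requested)
    best.used_gpus += gpus_requested
    return best.name


if __name__ == "__main__":
    cluster = [Node("gpu-node-a", 8), Node("gpu-node-b", 8)]
    for request in [4, 2, 2, 8, 1]:
        print(request, "->", place(cluster, request))
```

Run as-is, the three small requests are packed onto `gpu-node-a`. That leaves `gpu-node-b` fully free, so the 8-GPU job still fits. The final 1-GPU request has nowhere to go and would stay pending.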

AI pipelines break easily: version mismatches, failed deployments, faulty container builds, and so on.

Kubernetes reduces this risk with:

“A machine learning team at a mobility startup once shared that their model deployments failed weekly due to environment drift. After moving to Kubernetes, deployment errors dropped by over 60% because containers stayed consistent across all stages.”

This reliability is why teams prefer Kubernetes for scalable AI model deployment instead of traditional servers.

AI in 2026 depends heavily on MLOps.

Models must train, test, validate, and deploy continuously.

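Kubernetes integrates naturally with leading MLOps tools:

- Kubeflow, Argo Workflows, and Tekton for training and deployment pipelines
- MLflow for experiment tracking and model management
- KServe, Seldon Core, and Ray Serve for model serving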

Companies that offer generative AI consulting or MLOps consulting services often build these systems directly on Kubernetes because it supports the entire AI lifecycle.

With this setup, teams don’t manage infrastructure manually. They focus on models instead of servers.

Large organizations use multiple clouds or hybrid setups.

AI workloads need to move between them smoothly.

Kubernetes has become the backbone for:

“A retail chain in the Middle East uses Kubernetes to run edge inference in stores while managing training jobs in the cloud. This setup cut central cloud traffic by 55%, improving both performance and cost.”

This is the kind of example that shows why Kubernetes for machine learning is more practical than traditional deployment methods.

LLMs and multimodal models are a different beast.

They need distributed inference and large GPU clusters.

Kubernetes supports this with:

This is why companies building generative AI features, recommendation engines, chatbots, copilots, or search often rely on Kubernetes.

It’s not just about running a model. It’s about scaling it smoothly without breaking pipelines.

Traditional deployment methods break down because they:

This is why many teams prefer Kubernetes over traditional AI deployment methods.

Kubernetes offers the automation and elasticity that AI workloads demand in 2026.

In short, Kubernetes brings structure, automation, and cost efficiency to modern AI systems.

It powers large and small workloads alike.

It scales predictions without breaking budgets.

And it gives teams a stable platform for running both classic and generative AI.

That’s why it has become one of the strongest foundations for scalable AI model deployment today, and why it will matter even more in the years ahead.

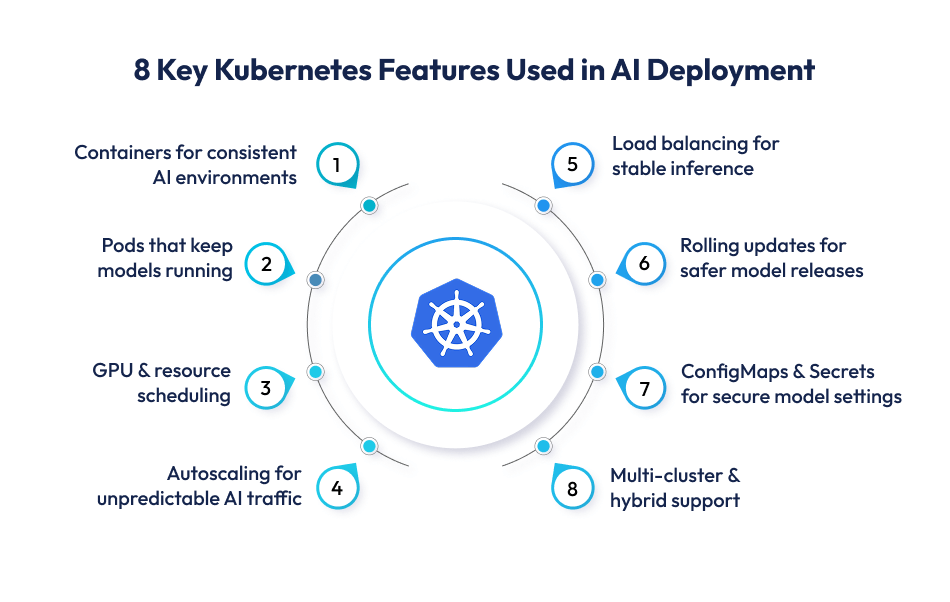

AI workloads depend on speed, consistency, and reliable scaling.

Kubernetes offers all three.

Its core features make it one of the strongest foundations for AI deployment in 2026.

AI models break easily when environments don’t match.

Different libraries, CUDA versions, or driver issues can crash an entire pipeline.

Kubernetes solves this with containers.

Everything (model, code, dependencies) stays packaged together.

It runs the same way on any cluster or cloud, as long as the nodes provide compatible hardware and GPU drivers.

“Google uses this approach internally to run large-scale ML models across multiple regions.”

This level of consistency is why containerization is now essential for scalable AI model deployment.

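A Pod is the basic unit in Kubernetes. It runs one or more containers, which Kubernetes monitors and keeps healthy.

If a model-serving container crashes, Kubernetes restarts it automatically. If a whole Pod is lost, a controller such as a Deployment replaces it.

If traffic increases and an autoscaler is configured, Kubernetes creates more Pods.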

This self-healing behavior is critical for AI models that must run 24/7.

“Netflix uses similar Pod strategies to make sure recommendation models never go offline during peak hours.”
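Here is a minimal sketch of a model-serving Deployment, built as a Python dict and printed as JSON. `kubectl apply -f` accepts JSON as well as YAML. The Deployment's `replicas` count is what Kubernetes restores when a Pod disappears. The liveness probe is what triggers automatic container restarts. The readiness probe keeps traffic away from a replica until it is ready, for example after its model has loaded. The image name, port, and the `/healthz` and `/ready` paths are hypothetical placeholders that depend on your serving code.

```python
import json


def model_deployment(name: str, image: str, replicas: int = 2, port: int = 8080) -> dict:
    """Build a Deployment manifest for a model server (names and paths are placeholders)."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            # The Deployment controller keeps this many Pods running, replacing any that are lost.
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [{
                        "name": name,
                        "image": image,
                        "ports": [{"containerPort": port}],
                        # Liveness: if this check keeps failing, the kubelet restarts the container.
                        "livenessProbe": {
                            "httpGet": {"path": "/healthz", "port": port},
                            "initialDelaySeconds": 30,
                            "periodSeconds": 10,
                        },
                        # Readiness: the Pod only receives Service traffic while this check passes.
                        "readinessProbe": {
                            "httpGet": {"path": "/ready", "port": port},
                            "periodSeconds": 5,
                        },
                    }],
                },
            },
        },
    }


if __name__ == "__main__":
    manifest = model_deployment("fraud-model", "registry.example.com/fraud-model:1.0.0")
    # Pipe this output into: kubectl apply -f -
    print(json.dumps(manifest, indent=2))
```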

AI workloads depend heavily on GPUs. Kubernetes offers GPU scheduling to make sure models get the right hardware at the right time.

It helps teams:

For AI teams managing multiple models, this solves a major bottleneck.

“Many cloud-native companies shifted to Kubernetes because they saw up to 30% less unused GPU time after scheduling optimization.”

This benefit directly supports Kubernetes AI workloads where GPU efficiency matters.
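In practice, a Pod asks for GPUs through its resource limits. The sketch below assumes the NVIDIA device plugin is installed, which exposes GPUs to Kubernetes as the extended resource `nvidia.com/gpu`. By default, these GPUs are requested in whole units. For extended resources like this one, setting only the limit makes the request equal to it. The `pool: gpu` node label and the image are hypothetical and depend on how your GPU node pool is set up.

```python
import json


def gpu_inference_pod(name: str, image: str, gpus: int) -> dict:
    """Build a Pod manifest that requests whole GPUs via the NVIDIA device plugin resource."""
    if gpus < 1:
        raise ValueError("GPUs are allocated in whole units; request at least 1")
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            # Hypothetical label: use whatever label your GPU node pool actually carries.
            "nodeSelector": {"pool": "gpu"},
            "containers": [{
                "name": name,
                "image": image,
                "resources": {
                    # Extended resource exposed by the NVIDIA device plugin.
                    # With only a limit set, the request defaults to the same value.
                    "limits": {"nvidia.com/gpu": gpus},
                },
            }],
        },
    }


if __name__ == "__main__":
    print(json.dumps(gpu_inference_pod("llm-inference", "registry.example.com/llm:2.1", 2), indent=2))
```

Because each Pod declares exactly how many GPUs it needs, the scheduler only places it on a node with that many unallocated GPUs. This is what keeps expensive cards from being double-booked or silently left idle.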

AI traffic is never stable. Fraud detection spikes at midnight. E-commerce spikes happen during sales. Healthcare queries rise during emergencies.

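Kubernetes handles this with autoscaling:

- Horizontal Pod Autoscaler (HPA) adds or removes Pod replicas based on CPU usage or custom metrics such as requests per second
- Vertical Pod Autoscaler (VPA) adjusts the CPU and memory each Pod requests
- KEDA scales workloads on event sources such as queue length, including down to zero when idle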

With these tools, models scale out quickly when demand rises and shrink when it drops.

This keeps AI model deployment in 2026 efficient and cost-controlled.
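The Kubernetes documentation describes the core rule of the Horizontal Pod Autoscaler (HPA) as `desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue)`. Scaling is skipped when that ratio is within a tolerance of 0.1 by default, and the result is kept within the configured minimum and maximum. The sketch below reproduces only that arithmetic. The real controller also handles missing metrics, Pods that are not ready yet, and scale-down stabilization windows. The requests-per-second numbers in the example are hypothetical.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Replica count the HPA formula would ask for (simplified; no stabilization windows)."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to target: leave the replica count unchanged.
        desired = current_replicas
    else:
        desired = math.ceil(current_replicas * ratio)
    # Clamp to the configured bounds.
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # Target: 50 requests/second per Pod, currently 4 Pods.
    for observed in (120, 52, 10, 200):
        print(f"{observed} rps/pod -> {desired_replicas(4, observed, 50)} replicas")
```

Four Pods averaging 120 requests per second against a target of 50 scale to 10. A load of 52 is within tolerance, so nothing changes. A load of 10 scales down to 1. A load of 200 would ask for 16 Pods but is capped at the maximum of 10.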

AI inference cannot slow down when requests increase.

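A Kubernetes Service spreads incoming requests across Pods so all model instances stay responsive.

If a Pod fails its readiness check, for example because it is still loading a model or has stopped responding, Kubernetes stops sending it traffic and routes requests to the remaining healthy Pods.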

This helps avoid downtime, especially during high-demand moments.

Companies like Spotify and DoorDash use Kubernetes load balancing to deliver real-time ML predictions without delay.
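Here is a simplified model of what a Service does with its endpoints. Only Pods whose readiness probe is passing are eligible, and requests are spread across them. The round-robin order is an assumption made for illustration. Depending on its proxy mode, kube-proxy picks endpoints randomly (iptables mode) or with a configurable algorithm (IPVS mode), and it does not measure how busy each Pod is. The Pod names are hypothetical.

```python
from __future__ import annotations

from itertools import count


class ServiceRouter:
    """Toy model of a Service spreading requests over ready Pods (not kube-proxy itself)."""

    def __init__(self, pods: list[str]) -> None:
        # Every Pod starts as ready; in a cluster, readiness probes would set this.
        self.ready = {pod: True for pod in pods}
        self._counter = count()

    def set_ready(self, pod: str, is_ready: bool) -> None:
        """Simulate a readiness probe result for one Pod."""
        self.ready[pod] = is_ready

    def route(self) -> str:
        """Return the next ready Pod in round-robin order."""
        endpoints = [pod for pod, ok in self.ready.items() if ok]
        if not endpoints:
            raise RuntimeError("no ready endpoints; requests would fail")
        return endpoints[next(self._counter) % len(endpoints)]


if __name__ == "__main__":
    router = ServiceRouter(["model-pod-a", "model-pod-b", "model-pod-c"])
    print([router.route() for _ in range(3)])
    router.set_ready("model-pod-b", False)  # e.g. its readiness probe starts timing out
    print([router.route() for _ in range(4)])
```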

Updating an AI model is risky. A bad update can break predictions instantly.

Kubernetes makes updates safer with:

- rolling updates
- canary releases
- rollbacks to the previous version

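Teams test a small percentage of traffic on the new model first.

If it performs well, the new version is rolled out to all traffic.

If it fails, the release is rolled back, either manually (for example with `kubectl rollout undo`) or automatically by progressive-delivery tools such as Argo Rollouts or Flagger.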

This feature is widely used in MLOps workflows and supports the reliability needed for Kubernetes for scalable AI model deployment.
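The canary flow described above fits in a few lines. Hashing a request ID gives each request a stable bucket, so a fixed share of traffic goes to the new model. A simple error-rate comparison then decides whether to promote or roll back. This is a minimal sketch under stated assumptions. The 10% split, the one-percentage-point tolerance, and the function names are hypothetical. Tools such as Argo Rollouts or Flagger run this kind of analysis against real metrics.

```python
import hashlib


def routes_to_canary(request_id: str, canary_percent: int) -> bool:
    """Send a stable share of requests to the canary based on a hash of the request ID."""
    # SHA-256 -> integer -> bucket 0..99; the same ID always lands in the same bucket.
    bucket = int(hashlib.sha256(request_id.encode()).hexdigest(), 16) % 100
    return bucket < canary_percent


def canary_decision(stable_errors: int, stable_total: int,
                    canary_errors: int, canary_total: int,
                    max_extra_error_rate: float = 0.01) -> str:
    """Promote unless the canary's error rate exceeds the stable rate by more than the margin."""
    stable_rate = stable_errors / stable_total
    canary_rate = canary_errors / canary_total
    if canary_rate <= stable_rate + max_extra_error_rate:
        return "promote"
    return "rollback"


if __name__ == "__main__":
    ids = [f"req-{i}" for i in range(10_000)]
    share = sum(routes_to_canary(r, 10) for r in ids) / len(ids)
    print(f"canary share: {share:.1%}")  # expected to be close to 10%
    print(canary_decision(stable_errors=50, stable_total=9000, canary_errors=4, canary_total=1000))
```

Hashing instead of random sampling means a given user or request ID sees the same model version on every retry. This makes the comparison between versions cleaner.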

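AI models depend on configuration, API keys, and environment variables. Kubernetes keeps them separate from code: ConfigMaps hold non-sensitive settings, while Secrets hold sensitive data such as API keys. Secrets are only base64-encoded by default, so teams typically enable encryption at rest and restrict access with RBAC.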

Enterprises building generative AI systems (recommendation engines, AI copilots, or chatbots) rely on this feature to manage tokens and configuration files safely across environments.
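Here is a minimal sketch of keeping an API key out of the container image. The key goes into a Secret, whose `data` values must be base64-encoded. Non-sensitive settings go into a ConfigMap. The container then reads both as environment variables through `envFrom.configMapRef` and `env[].valueFrom.secretKeyRef`. The names `model-config`, `model-secrets`, and `LLM_API_KEY`, and all values, are hypothetical. Remember that base64 is an encoding, not encryption.

```python
import base64
import json


def config_map(name: str, settings: dict[str, str]) -> dict:
    """Non-sensitive settings; ConfigMap values must be strings."""
    return {"apiVersion": "v1", "kind": "ConfigMap",
            "metadata": {"name": name}, "data": settings}


def secret(name: str, values: dict[str, str]) -> dict:
    """Sensitive values; the Secret "data" field requires base64-encoded strings."""
    encoded = {k: base64.b64encode(v.encode()).decode() for k, v in values.items()}
    return {"apiVersion": "v1", "kind": "Secret", "type": "Opaque",
            "metadata": {"name": name}, "data": encoded}


def container_env(config_name: str, secret_name: str, secret_key: str) -> dict:
    """Fragment to merge into a container spec so the model server sees both as env vars."""
    return {
        # Every key in the ConfigMap becomes an environment variable.
        "envFrom": [{"configMapRef": {"name": config_name}}],
        # One Secret key is exposed under an explicit variable name.
        "env": [{"name": secret_key,
                 "valueFrom": {"secretKeyRef": {"name": secret_name, "key": secret_key}}}],
    }


if __name__ == "__main__":
    objects = [
        config_map("model-config", {"MODEL_VERSION": "v3", "MAX_BATCH_SIZE": "16"}),
        secret("model-secrets", {"LLM_API_KEY": "replace-me"}),
    ]
    # A v1 List can be applied in one go: kubectl apply -f -
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": objects}, indent=2))
```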

Many companies run AI across multiple clouds and environments.

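Kubernetes supports this by offering the same API and deployment model in every environment, so clusters across clouds, data centers, and edge sites can be managed consistently.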

This is useful for:

Retail companies often run model inference at the edge (inside stores) while training models in the cloud. Kubernetes makes that flow seamless.

In short, these core features make Kubernetes a strong foundation for AI teams in 2026. They remove the friction, automate the heavy lifting, and keep models running reliably.

That’s why more teams are choosing Kubernetes for everything from ML experiments to large-scale generative AI deployments.

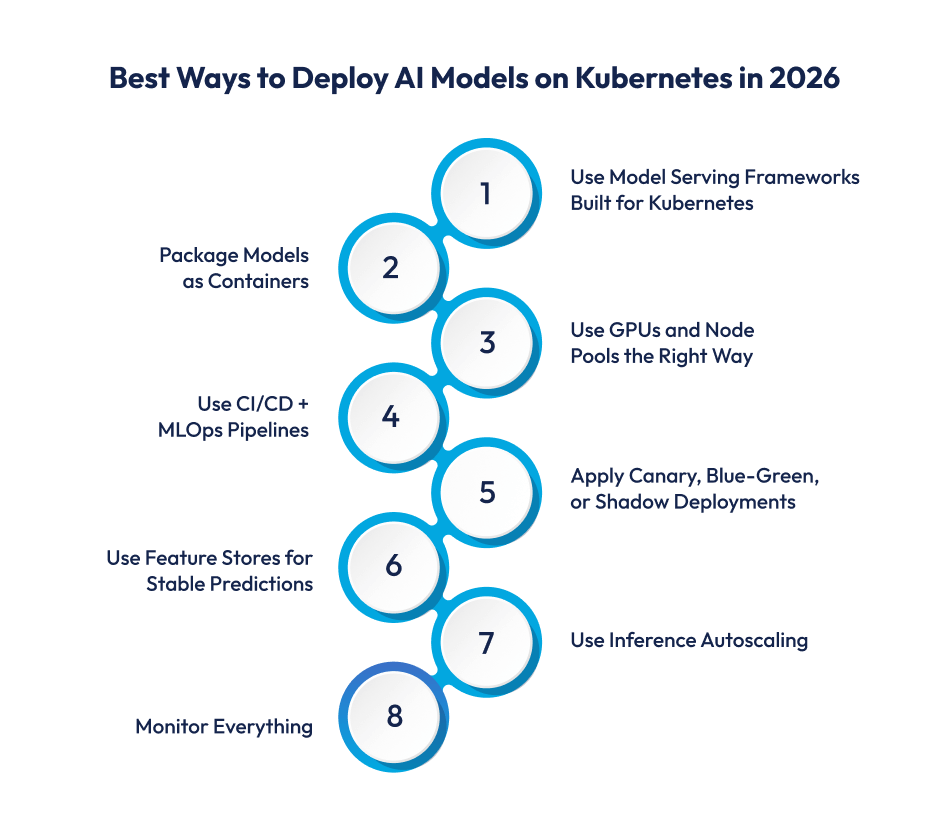

Deploying AI models on Kubernetes in 2026 has become smoother, but teams still need the right approach to handle growing Kubernetes AI workloads.

The methods below are what leading companies use today for reliable and scalable AI model deployment.

Tools like KServe, Seldon Core, and Ray Serve make Kubernetes for AI deployment far more efficient.

They support autoscaling, GPU allocation, and safe rollout strategies from day one.

“Netflix uses containerized serving frameworks to update recommendation models without service interruptions.”
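For example, KServe lets a model be declared as an `InferenceService` resource. The sketch below builds one as a Python dict using the `serving.kserve.io/v1beta1` API. The model name, the `sklearn` format, the storage URI, and the replica bounds are placeholders. The fields available depend on the KServe version you run, so treat this as an illustration to check against its documentation, not a verified manifest for your cluster.

```python
import json


def inference_service(name: str, model_format: str, storage_uri: str,
                      min_replicas: int = 1, max_replicas: int = 5) -> dict:
    """Build a KServe InferenceService manifest (placeholder values)."""
    return {
        "apiVersion": "serving.kserve.io/v1beta1",
        "kind": "InferenceService",
        "metadata": {"name": name},
        "spec": {
            "predictor": {
                # KServe scales the predictor between these bounds.
                "minReplicas": min_replicas,
                "maxReplicas": max_replicas,
                "model": {
                    # Selects a serving runtime that understands this model format.
                    "modelFormat": {"name": model_format},
                    # Where the runtime downloads the trained model from.
                    "storageUri": storage_uri,
                },
            }
        },
    }


if __name__ == "__main__":
    isvc = inference_service("fraud-detector", "sklearn",
                             "gs://example-bucket/models/fraud/v1")  # hypothetical bucket
    print(json.dumps(isvc, indent=2))
```

The design point is that the team declares *what* to serve and the scaling bounds. The serving framework then creates the underlying Deployments, autoscaling, and routing.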

Containerization is still the simplest way to achieve scalable AI model deployment.

Teams package the model and dependencies into containers so they behave the same across clusters and clouds.

The same container images can then be reused at every stage of large ML pipelines, from training to serving.

Kubernetes makes GPU usage smarter. Teams rely on:

- GPU scheduling
- dedicated GPU node pools
- automatic scaling of GPU nodes

Tech giants like Google use these patterns to control cost across massive training pipelines.

Modern AI needs automation.

Teams now combine Kubernetes with MLOps pipeline tools like Kubeflow, MLflow, Argo Workflows, or Tekton to automate training and deployment.

This approach also helps enterprises that rely on generative AI consulting or MLOps consulting services to streamline training, testing, and deployment workflows.
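Pipeline tools like these typically describe a workflow as a graph of steps, each running in its own container. The sketch below models that idea with Python's standard `graphlib`. Steps run in dependency order, and a validation gate stops deployment if the new model scores below a threshold. The step names, the 0.9 accuracy threshold, and the scores are hypothetical. This is not the Kubeflow, Argo, or Tekton API.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline: each step lists the steps it depends on.
PIPELINE = {
    "prepare_data": set(),
    "train": {"prepare_data"},
    "validate": {"train"},
    "deploy": {"validate"},
}


def run_pipeline(model_accuracy: float, threshold: float = 0.9) -> list[str]:
    """Return the steps that would run; deployment is gated on validation."""
    executed = []
    for step in TopologicalSorter(PIPELINE).static_order():
        if step == "deploy" and model_accuracy < threshold:
            # Validation gate: stop instead of shipping a weaker model.
            break
        # In a real pipeline, each step would run as its own container.
        executed.append(step)
    return executed


if __name__ == "__main__":
    print(run_pipeline(0.95))
    print(run_pipeline(0.80))
```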

Kubernetes offers safe ways to roll out new models. Teams commonly use:

Banks use these strategies before pushing new fraud-detection models into production.

Feature stores like Feast and Tecton integrate well with Kubernetes for machine learning pipelines.

They ensure consistent features during training and inference.

This reduces drift and improves long-term reliability.
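The core idea behind a feature store can be shown without any particular product. Define each feature transformation once, and call that same definition from both the training path and the serving path, so the model never sees features computed two different ways. The fraud-style feature names and formulas below are hypothetical, and this is not the Feast or Tecton API.

```python
import math


def transaction_features(amount: float, avg_amount_30d: float, hour: int) -> dict[str, float]:
    """Single source of truth for feature logic (hypothetical fraud features)."""
    return {
        "log_amount": math.log1p(amount),
        "amount_vs_avg": amount / avg_amount_30d if avg_amount_30d else 0.0,
        "is_night": 1.0 if hour < 6 else 0.0,
    }


def build_training_rows(history: list[dict]) -> list[dict[str, float]]:
    """Offline path: compute features in batch over historical records."""
    return [transaction_features(r["amount"], r["avg_amount_30d"], r["hour"]) for r in history]


def features_for_request(request: dict) -> dict[str, float]:
    """Online path: compute features for one live request with the same function."""
    return transaction_features(request["amount"], request["avg_amount_30d"], request["hour"])


if __name__ == "__main__":
    record = {"amount": 120.0, "avg_amount_30d": 40.0, "hour": 2}
    print(build_training_rows([record])[0] == features_for_request(record))
```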

Autoscalers in Kubernetes (HPA, VPA, and KEDA) keep AI services stable during traffic spikes.

Autoscaling is essential for applications like chatbots, e-commerce recommendations, and real-time document scanning.

This is also a big reason companies choose Kubernetes for scalable AI model deployment over traditional methods.

Kubernetes makes end-to-end monitoring easier. Teams use:

These tools track latency, GPU load, and model drift, which are critical for AI workloads running at scale.
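Tracking drift usually means comparing the distribution a model sees in production with the one it was trained on. One common measure is the population stability index (PSI), sketched below over pre-binned proportions. The bins and numbers are hypothetical. The commonly quoted reading (below 0.1 stable, 0.1 to 0.25 worth investigating, above 0.25 significant drift) is a rule of thumb, not a standard. In production, a value like this would typically be exported as a metric and alerted on.

```python
import math


def population_stability_index(expected: list[float], actual: list[float],
                               eps: float = 1e-6) -> float:
    """PSI between two binned distributions, each given as proportions summing to 1."""
    if len(expected) != len(actual):
        raise ValueError("distributions must use the same bins")
    psi = 0.0
    for e, a in zip(expected, actual):
        # Clamp empty bins so the logarithm stays defined.
        e, a = max(e, eps), max(a, eps)
        psi += (a - e) * math.log(a / e)
    return psi


if __name__ == "__main__":
    training = [0.25, 0.25, 0.25, 0.25]  # share of requests per input bin at training time
    live = [0.10, 0.20, 0.30, 0.40]      # same bins, measured in production
    print(f"PSI = {population_stability_index(training, live):.3f}")
```

For these example numbers, the PSI comes out at about 0.23, inside the "worth investigating" band.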

Leading generative AI development companies, including Techugo, use this monitoring layer to keep AI workloads stable, efficient, and predictable in production.

AI deployment in 2026 is no longer just about running models. It is about handling scale, performance, cost, and reliability without losing speed.

Kubernetes has become the backbone for this shift. It brings automation, elasticity, and stability to AI teams that need to move fast and stay consistent.

But getting AI models production-ready on Kubernetes still needs the right engineering support.

That’s where Techugo comes in.

Techugo, as a leading generative AI development company, helps businesses build, deploy, and scale AI solutions with confidence.

Startups, enterprises, and government organizations rely on Techugo to turn complex AI workloads into stable, scalable systems.

From generative AI integration services to MLOps consulting services, the Techugo team ensures models are trained, containerized, and deployed using Kubernetes best practices.

If you’re planning to bring AI into your product or want to modernize how your models run in production, Techugo can help you build it the right way and scale it when it matters most.

Kubernetes offers automation, scaling, and workload orchestration, which AI teams need for heavy training and inference tasks. It makes deployment stable across clusters and clouds. This is why many companies now prefer Kubernetes for AI deployment instead of traditional VM-based setups.

Yes. Kubernetes supports GPU scheduling, GPU node pools, and automatic scaling. With cluster autoscaling, it can add GPU nodes when needed and remove them when workloads drop. This makes GPU-based training and inference more cost-efficient and reliable in Kubernetes AI workloads.

For large AI models and high-traffic workloads, yes. Kubernetes provides better autoscaling, rollout control, and resource management. Serverless works for smaller models, but Kubernetes offers more flexibility for scalable AI model deployment.

Companies use Kubernetes to run training pipelines, manage inference at scale, automate MLOps, and keep compute costs predictable. Netflix uses containerized ML workflows. Google uses Kubernetes to manage distributed training workloads. Banks rely on it for stable fraud-detection inference.

Teams commonly use:
